3.3. Conditional Feature Importance

Conditional Feature Importance (CFI) is a model-agnostic approach for quantifying the relevance of individual features or groups of features in predictive models. It is a perturbation-based method that compares the predictive performance of a model on unmodified test data, which follows the same distribution as the training data, to its performance when the studied feature is conditionally perturbed. Since only the test data is modified, this approach does not require retraining the model.

3.3.1. Theoretical index

CFI estimates feature importance through conditional perturbations. Specifically, it constructs a perturbed version of the \(j^{th}\) feature, \(X_j^p\), sampled independently from the conditional distribution \(P(X_j | X_{-j})\), such that its association with the output is removed: \(X_j^p \perp Y \mid X_{-j}\). The predictive model is then evaluated on the modified feature vector \(\tilde X = [X_1, ..., X_j^p, ..., X_p]\), and the importance of the feature is quantified by the resulting drop in model performance.

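The target quantity estimated by CFI is the Total Sobol Index (TSI). Indeed, for the squared loss in regression,

\[\frac{1}{2}\,\mathbb{E}\left[\left(Y - \mu(\tilde X)\right)^2 - \left(Y - \mu(X)\right)^2\right] = \mathbb{E}\left[\left(Y - \mu_{-j}(X_{-j})\right)^2\right] - \mathbb{E}\left[\left(Y - \mu(X)\right)^2\right],\]

where \(\mu(X) = \mathbb{E}[Y | X]\) is the theoretical model and \(\mu_{-j}(X_{-j}) = \mathbb{E}[Y| X_{-j}]\) is the theoretical model without the \(j^{th}\) feature. The left-hand side is half the loss increase measured by CFI, and the right-hand side is the TSI.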
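The link between CFI and the TSI can be checked numerically. The sketch below is a Monte Carlo illustration under assumptions that are not part of the original text: two standard Gaussian features with correlation `rho`, a known linear model with hypothetical coefficients `beta`, and the squared loss. In this setting the conditional distribution of the first feature is available in closed form, so no estimation is needed, and the CFI loss increase is expected to be close to twice the TSI.

```python
import numpy as np

rng = np.random.default_rng(0)
n, rho = 200_000, 0.6
beta = np.array([2.0, -1.0])  # hypothetical coefficients, chosen for illustration

# Two standard Gaussian features with correlation rho, and a linear response.
x2 = rng.standard_normal(n)
x1 = rho * x2 + np.sqrt(1 - rho**2) * rng.standard_normal(n)
y = beta[0] * x1 + beta[1] * x2 + rng.standard_normal(n)


def mu(a, b):
    """True regression function E[Y | X] in this simulated setting."""
    return beta[0] * a + beta[1] * b


# Perturbed X_1: a fresh draw from P(X_1 | X_2) = N(rho * X_2, 1 - rho^2).
x1_p = rho * x2 + np.sqrt(1 - rho**2) * rng.standard_normal(n)

# CFI-type quantity: increase in squared loss after the conditional perturbation.
cfi = np.mean((y - mu(x1_p, x2)) ** 2) - np.mean((y - mu(x1, x2)) ** 2)

# TSI: squared loss of mu_{-1}(X_2) = E[Y | X_2] minus that of the full model.
mu_minus_1 = (beta[0] * rho + beta[1]) * x2
tsi = np.mean((y - mu_minus_1) ** 2) - np.mean((y - mu(x1, x2)) ** 2)

# Closed form of the TSI in this Gaussian linear setting.
tsi_exact = beta[0] ** 2 * (1 - rho**2)
print(f"CFI / 2 = {cfi / 2:.3f}, TSI = {tsi:.3f}, closed form = {tsi_exact:.3f}")
```

With real data neither \(\mu\) nor the conditional distribution is known, so both have to be estimated, which is the subject of the estimation procedure.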

3.3.2. Estimation procedure

The estimation of CFI relies on the ability to sample the perturbed feature matrix \(\tilde X\), and specifically to sample \(X_j^p\) independently from the conditional distribution, \(X_j^p \overset{\text{i.i.d.}}{\sim} P(X_j | X_{-j})\), while breaking the association with the output \(Y\). Any conditional sampler can be used. A valid and efficient approach is conditional permutation (Chamma et al.[1]). This procedure decomposes the \(j^{th}\) feature into a part that is predictable from the other features and a residual term that is independent of the other features:

\[X_j = \nu_j(X_{-j}) + \epsilon_j\]

Here \(\nu_j(X_{-j}) = \mathbb{E}[X_j | X_{-j}]\) is the conditional expectation of \(X_j\) given the other features. In practice, \(\nu_j\) is unknown and has to be estimated from the data using a predictive model.

Then the perturbed feature \(X_j^p\) is generated by keeping the predictable part \(\nu_j(X_{-j})\) unchanged and replacing the residual \(\epsilon_j\) with a randomly permuted version \(\epsilon_j^p\):

\[X_j^p = \nu_j(X_{-j}) + \epsilon_j^p\]

Note

Estimation of \(\nu_j\)

To generate the perturbed feature \(X_j^p\), a model for \(\nu_j\) is required. Estimating \(\nu_j\) amounts to modeling the relationship between features and is arguably an easier task than estimating the relationship between features and the target. This ‘model-X’ assumption has been argued for, for instance, in Chamma et al.[1] and Candes et al.[2]. For example, in genetics, features such as single nucleotide polymorphisms (SNPs) are the basis of complex biological processes that result in an outcome (phenotype), such as a disease. Predicting the phenotype from SNPs is challenging, whereas modeling the relationships between SNPs is often easier due to known correlation structures in the genome (linkage disequilibrium). As a result, simple predictive models such as regularized linear models or decision trees can be used to estimate \(\nu_j\).
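The conditional permutation step can be sketched in a few lines of NumPy. This is a minimal illustration, not the library's implementation: the helper `conditional_permutation` is hypothetical, and \(\nu_j\) is estimated here by ordinary least squares on the same data, which is only one possible choice of imputation model.

```python
import numpy as np


def conditional_permutation(X, j, rng):
    """Return a copy of X whose j-th column is conditionally permuted.

    Hypothetical helper: nu_j is estimated by ordinary least squares,
    an illustrative choice of imputation model.
    """
    X_minus_j = np.delete(X, j, axis=1)
    # Design matrix with an intercept for the regression of X_j on X_{-j}.
    design = np.column_stack([np.ones(len(X)), X_minus_j])
    coef, *_ = np.linalg.lstsq(design, X[:, j], rcond=None)
    nu_hat = design @ coef        # predictable part, estimate of nu_j(X_{-j})
    residual = X[:, j] - nu_hat   # estimate of epsilon_j
    X_perturbed = X.copy()
    # Keep the predictable part and shuffle the residuals across samples.
    X_perturbed[:, j] = nu_hat + rng.permutation(residual)
    return X_perturbed


rng = np.random.default_rng(0)
cov = np.array([[1.0, 0.8], [0.8, 1.0]])
X = rng.multivariate_normal(np.zeros(2), cov, size=2000)
X_p = conditional_permutation(X, j=0, rng=rng)

# The dependence between the perturbed feature and the other feature is preserved.
corr_before = np.corrcoef(X[:, 0], X[:, 1])[0, 1]
corr_after = np.corrcoef(X_p[:, 0], X_p[:, 1])[0, 1]
print(f"corr(X_0, X_1) before: {corr_before:.2f}, after: {corr_after:.2f}")
```

Unlike a marginal permutation of the column, which would destroy its correlation with the other features, this construction keeps the perturbed sample close to the joint feature distribution while removing the part of \(X_j\) that is not explained by \(X_{-j}\).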

3.3.3. Inference

Under standard assumptions such as an additive model, \(Y = \mu(X) + \epsilon\), Conditional Feature Importance (CFI) allows for conditional independence testing, which determines whether a feature provides unique information to the model’s predictions that is not already captured by the other features. Essentially, we are testing whether the output is independent of the studied feature given the rest of the input:

\[\mathcal{H}_0: Y \perp X_j \mid X_{-j}\]

The core of this inference is to test the statistical significance of the loss differences estimated by CFI. Consequently, a one-sample test on the loss differences (or a paired test on the losses) needs to be performed.

Two technical challenges arise in this context:

Dependence across folds: When cross-validation (for instance, k-fold) is used to estimate CFI, the loss differences obtained from different folds are not independent, because the training sets overlap. Consequently, a simple t-test on the loss differences is not valid. This issue can be addressed by a corrected t-test that accounts for this dependence, such as the one proposed in Nadeau and Bengio[3].

Vanishing variance: Under the null hypothesis, the loss difference converges to zero, but its variance also vanishes because the importance is a quadratic functional (Verdinelli and Wasserman, 2024). This makes the standard one-sample t-test invalid. This second issue can be handled by correcting the variance estimate or by using a nonparametric test.
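As an illustration of the first correction, the sketch below computes a corrected resampled t statistic in the style of Nadeau and Bengio, where the naive variance of the mean is inflated by the ratio of test to training set sizes. The function name `corrected_t_statistic` and the per-fold loss differences are hypothetical and serve only to show the arithmetic. This sketch does not address the vanishing-variance issue.

```python
import math
import statistics


def corrected_t_statistic(fold_differences, n_train, n_test):
    """Corrected resampled t statistic (hypothetical helper).

    fold_differences: one mean loss difference per cross-validation fold.
    """
    k = len(fold_differences)
    mean_diff = statistics.fmean(fold_differences)
    var_diff = statistics.variance(fold_differences)  # unbiased sample variance
    # The naive variance of the mean is var_diff / k. The extra term
    # n_test / n_train accounts for the overlap between training sets.
    corrected_var = (1.0 / k + n_test / n_train) * var_diff
    return mean_diff / math.sqrt(corrected_var)


# Hypothetical per-fold loss differences from a 5-fold split of 500 samples.
diffs = [0.21, 0.35, 0.18, 0.30, 0.26]
t_corrected = corrected_t_statistic(diffs, n_train=400, n_test=100)
t_naive = statistics.fmean(diffs) / math.sqrt(statistics.variance(diffs) / len(diffs))
print(f"naive t = {t_naive:.2f}, corrected t = {t_corrected:.2f}")
```

The corrected statistic is smaller than the naive one, so the test is more conservative. A p-value would typically be obtained by comparing it with a Student t distribution with k - 1 degrees of freedom.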

3.3.4. Regression example

The following example illustrates the use of CFI on a regression task:

>>> from sklearn.datasets import make_regression
>>> from sklearn.linear_model import LinearRegression
>>> from sklearn.model_selection import train_test_split
>>> from hidimstat import CFI
>>> X, y = make_regression(n_features=2)
>>> X_train, X_test, y_train, y_test = train_test_split(X, y)
>>> # Fit the predictive model whose features are assessed.
>>> model = LinearRegression().fit(X_train, y_train)
>>> # The imputation model predicts each feature from the other features.
>>> cfi = CFI(estimator=model, imputation_model_continuous=LinearRegression())
>>> cfi = cfi.fit(X_train, y_train)
>>> # Importance is computed on held-out data.
>>> features_importance = cfi.importance(X_test, y_test)

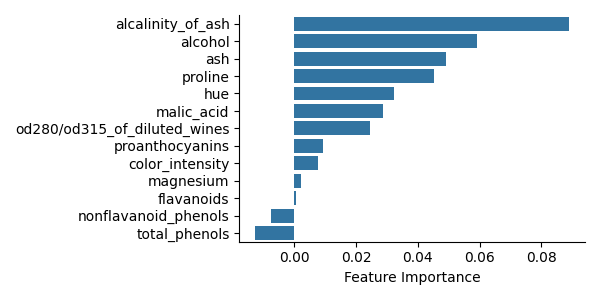

3.3.5. Classification example

To measure feature importance in a classification task, a classification loss should be used. In addition, the prediction method of the estimator should output the type of prediction that the loss expects (probabilities or classes). The following example illustrates the use of CFI on a classification task:

>>> from sklearn.datasets import make_classification
>>> from sklearn.ensemble import RandomForestClassifier
>>> from sklearn.linear_model import LinearRegression
>>> from sklearn.metrics import log_loss
>>> from sklearn.model_selection import train_test_split
>>> from hidimstat import CFI
>>> X, y = make_classification(n_features=4)
>>> X_train, X_test, y_train, y_test = train_test_split(X, y)
>>> model = RandomForestClassifier().fit(X_train, y_train)
>>> # log_loss is computed on probabilities, hence method="predict_proba".
>>> cfi = CFI(
...     estimator=model,
...     imputation_model_continuous=LinearRegression(),
...     loss=log_loss,
...     method="predict_proba",
... )
>>> cfi = cfi.fit(X_train, y_train)
>>> features_importance = cfi.importance(X_test, y_test)
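The pairing between the loss and the prediction method can be illustrated with scikit-learn alone. The sketch below is independent of hidimstat and uses a `LogisticRegression` classifier as an illustrative choice: `log_loss` is evaluated on the probabilities returned by `predict_proba`, whereas `zero_one_loss` is evaluated on the class labels returned by `predict`.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import log_loss, zero_one_loss
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=300, n_features=4, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
model = LogisticRegression().fit(X_train, y_train)

# log_loss expects class probabilities, as returned by predict_proba.
proba = model.predict_proba(X_test)
ll = log_loss(y_test, proba)

# zero_one_loss expects hard class labels, as returned by predict.
labels = model.predict(X_test)
zo = zero_one_loss(y_test, labels)

print(f"log loss: {ll:.3f}, 0-1 loss: {zo:.3f}")
```

Passing hard labels to a probability-based loss, or probabilities to a label-based loss, would either fail or give a misleading importance, which is why the loss and the prediction method should be chosen together.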